Hi,

I have built a scenario that scrapes an XML sitemap, takes the first 5 URLs, and scrapes each of them.

How can I keep a local list of already scraped URLs so that I don't scrape the same URL twice?

Thanks a lot.

If you want to remove duplicates within the same execution run, you can use the built-in deduplicate function, which removes duplicate values from an array.
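For anyone who wants to see what in-run deduplication amounts to outside of Make, here is a minimal Python sketch. It is only an illustration of the idea, not how the built-in function is implemented; the `deduplicate` helper and the example.com URLs are made up for the example.

```python
def deduplicate(urls):
    """Return the URLs with repeats removed, keeping first-seen order."""
    # dict keys are unique and preserve insertion order
    return list(dict.fromkeys(urls))


# Hypothetical URLs pulled from a sitemap in a single run
sitemap_urls = [
    "https://example.com/a",
    "https://example.com/b",
    "https://example.com/a",
]
unique_urls = deduplicate(sitemap_urls)
```

This only helps within one run, because the list is rebuilt from scratch each time the scenario executes.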

If you want to prevent duplicates between scenario runs, you will need to save the processed items somewhere persistent, for example a Data store or Google Sheets. That way, you can search the items that were processed in previous runs.

Hi,

That’s nice, I’m able to store already scraped URLs in a Google Sheet, but I don’t know how to do the check before scraping (between action id 8 and action id 7).

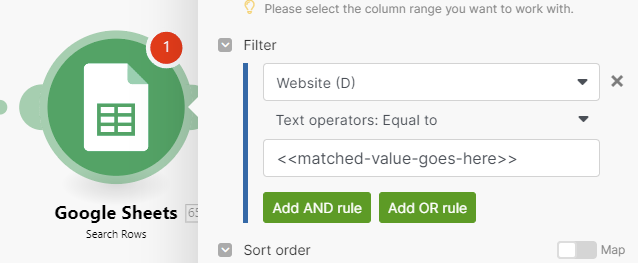

You can use a search module to check whether the value is already in the spreadsheet.

Then use a filter after that to only allow bundles through if no results are returned.
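The search-then-filter pattern can be sketched in Python like this. It is a rough equivalent under stated assumptions, not the actual Make modules: an SQLite table stands in for the spreadsheet or Data store, and `open_store`, `search`, and `process` are hypothetical names chosen for the example.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open the store of already scraped URLs (stand-in for the spreadsheet)."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS scraped (url TEXT PRIMARY KEY)")
    return conn


def search(conn, url):
    """Search step: return the stored rows matching this URL (empty if new)."""
    return conn.execute(
        "SELECT url FROM scraped WHERE url = ?", (url,)
    ).fetchall()


def process(conn, urls, scrape):
    """Scrape only URLs with no search results, then record them."""
    done = []
    for url in urls:
        if search(conn, url):
            # Filter step: results were returned, so this URL is skipped
            continue
        scrape(url)
        conn.execute("INSERT INTO scraped (url) VALUES (?)", (url,))
        conn.commit()
        done.append(url)
    return done
```

Passing a file path to `open_store` instead of the default `":memory:"` keeps the list between runs, which is the role the spreadsheet plays in the scenario. The order matters: search first, filter on "no results", scrape, and only then add the URL to the store.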

It’s okay, thanks a lot!