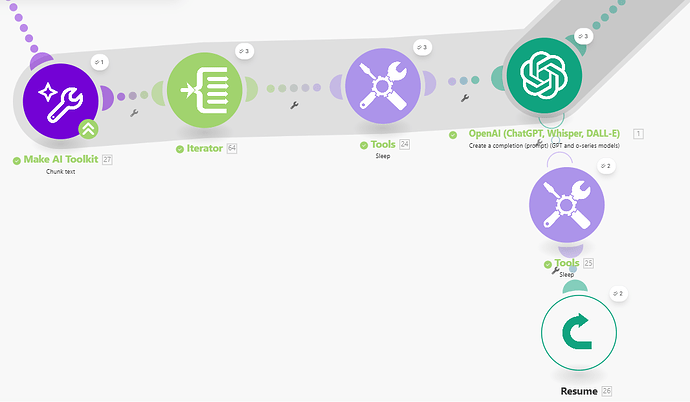

Right now, even with the chunk text module and an added sleep step plus error handling, OpenAI still receives multiple chunks at once, and the requests fail and end up in the error handlers.

So I added an Iterator after the chunk text module, but that didn't help: the same error messages appear in the output.

I want to send one chunk at a time, sequentially rather than in parallel: extract keywords from one chunk, then move on to the next one, so that I stay under the rate limit. The scenario itself doesn't fail, but it returns a rate limit error message instead of the output.
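To make the intended behavior concrete, here is a minimal Python sketch of the logic I'm after: one chunk per call, a pause between calls, and a retry with a doubling wait when a rate-limit error comes back. This is not a Make scenario. `process_sequentially`, `call_api`, and `RateLimitError` are hypothetical names, and `call_api` stands in for the real OpenAI keyword-extraction request.

```python
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for the API's rate-limit (HTTP 429) error."""


def process_sequentially(chunks, call_api, delay_s=1.0, max_retries=5, sleep=time.sleep):
    """Send chunks one at a time, never in parallel.

    After a rate-limit error, wait and retry the same chunk, doubling the
    wait each time; give up after max_retries retries. Pause delay_s
    seconds between chunks.
    """
    results = []
    for i, chunk in enumerate(chunks):
        wait = delay_s
        for attempt in range(max_retries + 1):
            try:
                results.append(call_api(chunk))  # one request for one chunk
                break
            except RateLimitError:
                if attempt == max_retries:
                    raise  # still rate-limited after all retries
                sleep(wait)
                wait *= 2  # exponential backoff before retrying this chunk
        if i < len(chunks) - 1:
            sleep(delay_s)  # pause before moving on to the next chunk
    return results


# Usage with a fake API that is rate-limited on the first attempt per chunk.
calls = {}


def flaky(chunk):
    calls[chunk] = calls.get(chunk, 0) + 1
    if calls[chunk] == 1:
        raise RateLimitError("simulated 429")
    return [w for w in chunk.split() if len(w) > 4]  # toy "keyword" extraction


waits = []
out = process_sequentially(
    ["rate limits matter", "process chunks sequentially"], flaky, sleep=waits.append
)
print(out)
```

Passing `sleep=waits.append` only records the waits so the example runs instantly; in real use the default `time.sleep` applies.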

I've attached pictures of the scenario; please have a look. Thank you in advance.