Hi there,

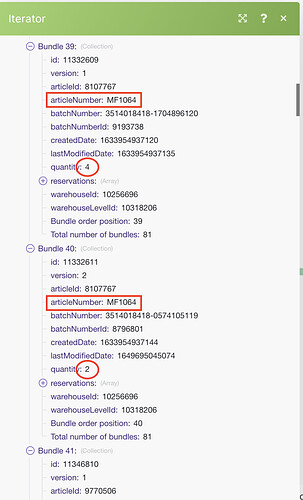

When I fetch the warehouse stock quantities from my ERP system, I get multiple bundles for the same article number. The reason is that my ERP system tracks stock at the individual batch-ID level, since the products are cosmetics.

That means I can get, for example, 3 bundles for the same product with different stock quantities, which I then need to sum into a single bundle with the combined quantity of those 3 bundles.

I have been trying this for a while, but I can’t find a solution.

How could this be done?

thx

Hi @Dusticelli,

As always, there are multiple ways of doing this.

You could sum them up in Google Sheets: search the rows for the ID, then write the previous value plus the value from the current operation.
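For anyone who wants to reason about it outside Make, the Google Sheets approach amounts to a running total keyed by ID. Below is a minimal Python sketch, assuming a plain dict stands in for the sheet and that the article numbers and quantities are made-up sample values; it is not a Make or Google Sheets API.

```python
# Minimal sketch: a dict stands in for the Google Sheet (ASSUMPTION, not a real API).
# Keys are article numbers, values are the accumulated quantities.
def upsert_running_total(sheet: dict, article_number: str, quantity: int) -> None:
    previous = sheet.get(article_number, 0)      # "Search rows": 0 if no row exists yet
    sheet[article_number] = previous + quantity  # "Update/Add row" with previous + new value


sheet = {}
# Hypothetical batch-level bundles: (article number, quantity)
bundles = [("A-100", 5), ("A-100", 3), ("B-200", 7), ("A-100", 2)]
for article_number, quantity in bundles:
    upsert_running_total(sheet, article_number, quantity)

print(sheet)  # {'A-100': 10, 'B-200': 7}
```

One likely caveat: because the totals live in the sheet, re-running the scenario on the same data adds the quantities again unless the rows are reset first.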

Outside of Google Sheets, within Make itself, you can use the Array Aggregator to aggregate multiple collections into one array and then take the sum of that array.
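A rough Python sketch of the aggregate-then-sum idea, assuming hypothetical field names (`article_number`, `batch_id`, `quantity`). It also shows why a plain sum of the aggregated array is not enough in this case: it adds all products together, so only the bundles for one article number may go into each sum.

```python
# Hypothetical batch-level bundles; field names are illustrative assumptions.
bundles = [
    {"article_number": "A-100", "batch_id": "LOT-1", "quantity": 5},
    {"article_number": "A-100", "batch_id": "LOT-2", "quantity": 3},
    {"article_number": "B-200", "batch_id": "LOT-7", "quantity": 7},
]

# Array Aggregator equivalent: every bundle's quantity ends up in one array.
aggregated = [b["quantity"] for b in bundles]

# Summing the whole array gives one grand total across ALL products...
grand_total = sum(aggregated)
print(grand_total)  # 15


# ...so per-article totals need a filter on the article number before summing.
def total_for(article_number: str) -> int:
    return sum(b["quantity"] for b in bundles if b["article_number"] == article_number)


print(total_for("A-100"))  # 8
```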

Which solution I would choose depends heavily on your workflow.

Hope it helps!

Best,

Richard


Hello Richard,

Thanks for your help. I could sum them inside Google Sheets, but that would cause problems elsewhere. So I think

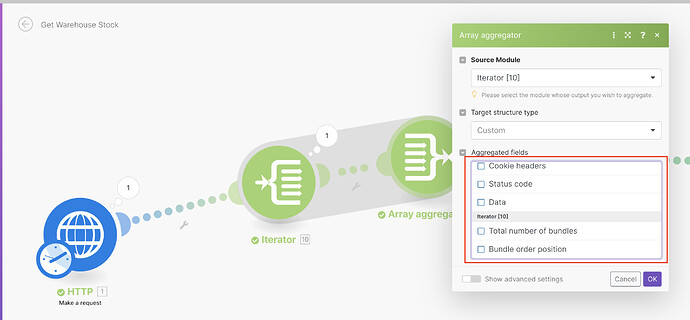

> in Make you can use the Array Aggregator to aggregate multiple collections into one array. Then take the sum of this array.

that would be my solution. I tried for a while with exactly that module, but I don’t think I know how to use it properly.

When I use the Array Aggregator, I don’t have the “value” option described in the make.com help section, and neither of the 2 options I can choose works.

I think I need to tell the aggregator to take only the bundles that share the same value for one key. But then, how do I tell it to sum the values of a different key for those bundles?

Can you help me a bit further with that?

thx

Hi @Dusticelli,

Did you ever find a solution to this problem? I’m struggling with exactly the same thing, and no YouTube video or documentation article seems to address this specific issue: how to sum similar line items together for further processing.

Thanks!

Hi Lars,

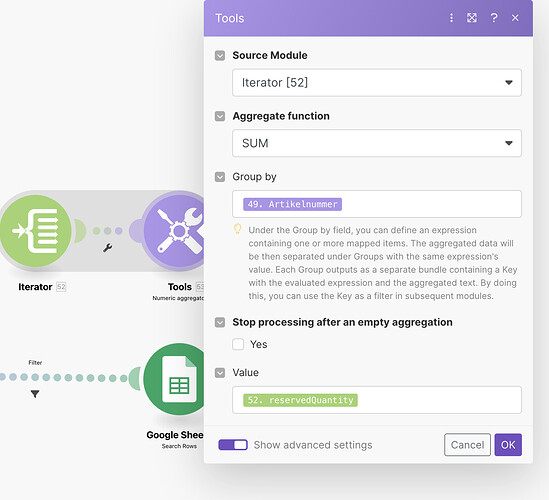

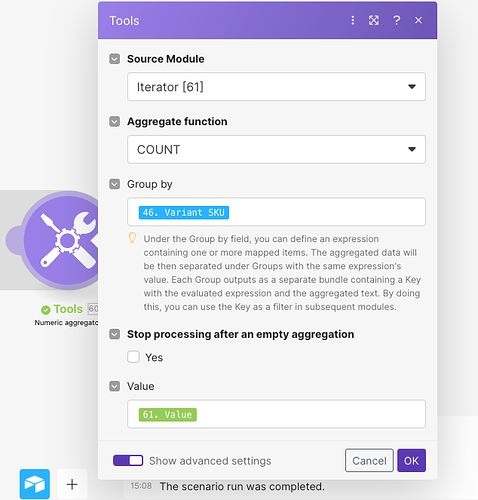

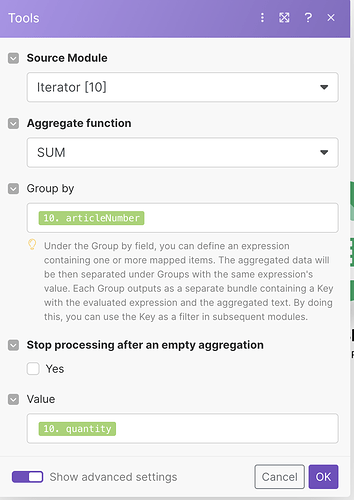

Yes. You can just add a Numeric Aggregator after the Iterator and group by the desired identifier.
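To make the grouping idea concrete, here is a minimal Python sketch that roughly emulates what a Numeric Aggregator with a group-by key does: one output per distinct key, with the chosen function applied to that key's values. The function names mirror the aggregator's SUM/AVG/COUNT/MIN/MAX options; the field names and the `key`/`result` output shape are illustrative assumptions, not Make's actual output format.

```python
from collections import defaultdict
from statistics import mean

# Aggregate functions named after the aggregator's options (names only; this is not Make code).
FUNCTIONS = {"SUM": sum, "AVG": mean, "COUNT": len, "MIN": min, "MAX": max}


def numeric_aggregate(bundles, group_by, value, function="SUM"):
    """Group bundles by one field and apply the chosen function to another field."""
    groups = defaultdict(list)
    for bundle in bundles:
        groups[bundle[group_by]].append(bundle[value])
    fn = FUNCTIONS[function]
    # One output per distinct key, in the order the keys were first seen.
    return [{"key": key, "result": fn(values)} for key, values in groups.items()]


# Hypothetical input: one bundle per batch, several batches per article.
bundles = [
    {"article_number": "A-100", "quantity": 5},
    {"article_number": "A-100", "quantity": 3},
    {"article_number": "B-200", "quantity": 7},
    {"article_number": "A-100", "quantity": 2},
]
print(numeric_aggregate(bundles, "article_number", "quantity"))
# [{'key': 'A-100', 'result': 10}, {'key': 'B-200', 'result': 7}]
```

The key design point is that the group-by field and the summed field are different fields, which is exactly the "same value within one key, sum another key" requirement from earlier in the thread.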


Hi @Dusticelli,

Thank you for your reply.

I can’t get my scenario working the way you have it. Judging by “Explain flow”, I think it should be correct, but my quantities aren’t being summed (or counted), and I end up with the same number of rows as I input.

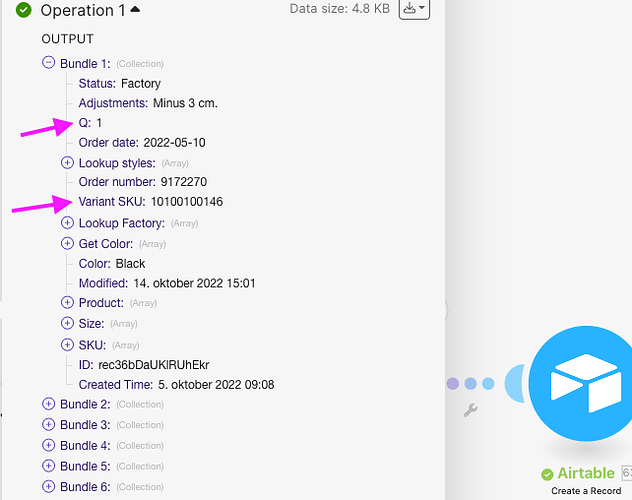

When I fetch my rows from Airtable, each line has a quantity field and a “Variant SKU” number that identifies the specific product, like so:

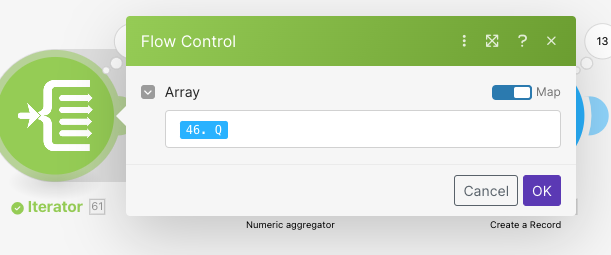

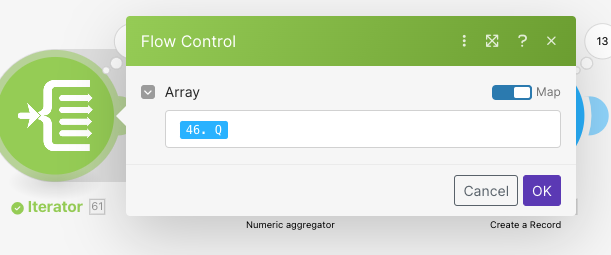

In the Iterator, I have mapped the “Q” field, which holds the quantity.

I have tried to set up the Numeric Aggregator like yours.

Since the Iterator seems to require an array, and I’m guessing my quantity field isn’t really an array, maybe that is why this is not working?

Thanks!

Hi @Lars_Bach ,

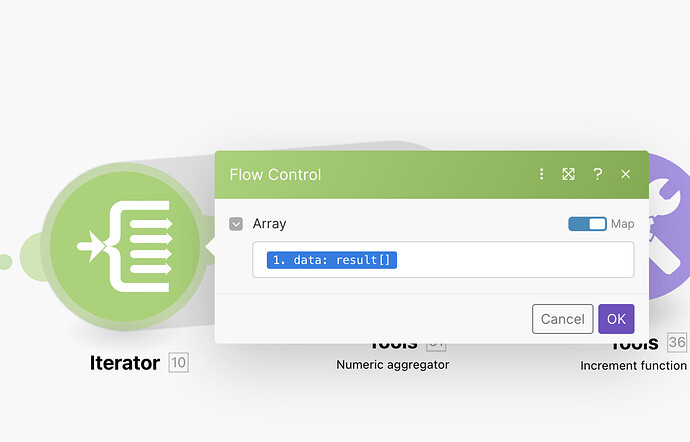

This is how I group the data; I hope this helps:

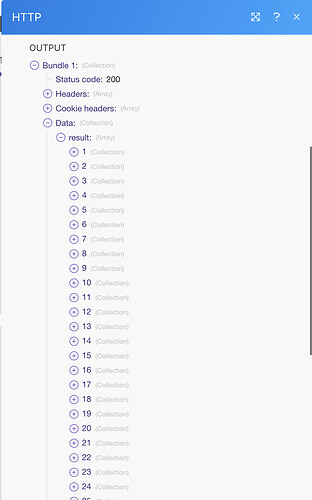

When I fetch data from my API, I get several “collections” within a nested “result”. These are the items I need to iterate over, because each collection represents (in my case) one product at the individual batch level. This means the same product may have multiple entries in the collections list, one for each batch, each with its own quantity.

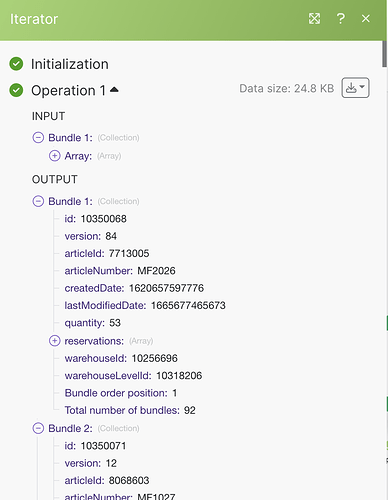

In the next step, I iterate over these collections to get one bundle as output for each collection.
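To illustrate the iterate step outside Make, here is a minimal Python sketch. The post only says the API returns several collections inside a nested `result`; the exact JSON shape (a top-level `result` array) and the field names `articlenumber`, `batch`, and `quantity` are assumptions made for the example.

```python
import json

# ASSUMPTION: response shape and field names are invented for illustration.
raw = """
{
  "result": [
    {"articlenumber": "4711", "batch": "L01", "quantity": 12},
    {"articlenumber": "4711", "batch": "L02", "quantity": 30},
    {"articlenumber": "0815", "batch": "L07", "quantity": 5}
  ]
}
"""

response = json.loads(raw)


def iterate_collections(payload):
    """Iterator equivalent: yield one 'bundle' per collection in the result array."""
    for collection in payload["result"]:
        yield collection


bundles = list(iterate_collections(response))
print(len(bundles))  # 3
# Article 4711 appears once per batch; summing per article happens in the grouping step.
print([b["articlenumber"] for b in bundles])  # ['4711', '4711', '0815']
```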

Finally, to sum the quantities from multiple entries of the same product with different batches, I group by articlenumber (in my case).

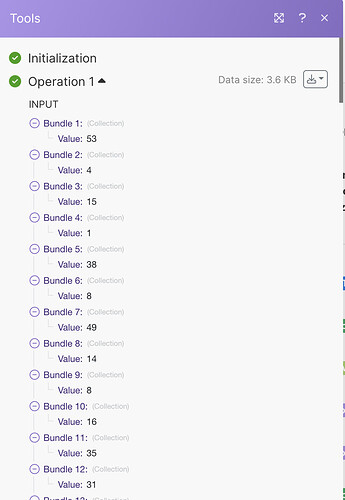

As a result, I get the summed quantity for each articlenumber.

@Lars_Bach

I don’t think you need an iterator if the output from your Airtable module already provides a bundle for each product (Variant SKU) you want to group.

Just try using the Numeric Aggregator directly after the “Watch Records” module.
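A likely reason for ending up with as many rows as were input (my reading of how the aggregator’s Source Module setting works, not something confirmed in this thread): the Source Module decides which bundles are combined. If it is an iterator that only ever receives one record per run, the aggregation restarts for every record and nothing gets merged. The Python sketch below simulates both cases, with hypothetical Airtable fields `variant_sku` and `q`.

```python
from collections import defaultdict

# Hypothetical Airtable records: each record is already its own bundle.
records = [
    {"variant_sku": "SKU-1", "q": 2},
    {"variant_sku": "SKU-1", "q": 4},
    {"variant_sku": "SKU-2", "q": 1},
]


def sum_by_sku(bundles):
    """Sum the 'q' field per 'variant_sku' across the given bundles."""
    totals = defaultdict(int)
    for b in bundles:
        totals[b["variant_sku"]] += b["q"]
    return dict(totals)


# Case 1: aggregation scoped to an iterator fed one record at a time.
# Each run only sees a single bundle, so there is one output per input record.
per_record = [sum_by_sku([record]) for record in records]
print(len(per_record))  # 3

# Case 2: aggregation scoped to the module that emits all records.
print(sum_by_sku(records))  # {'SKU-1': 6, 'SKU-2': 1}
```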

Hi @Dusticelli,

Thanks so much for the detailed walkthrough!

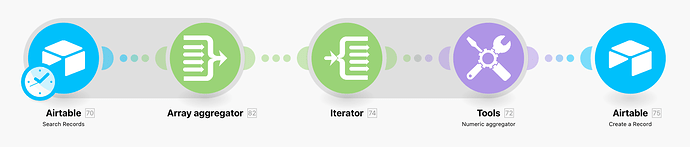

I was finally able to get it working using the following:

Maybe it is overkill, but that’s no concern right now, since we only need to run it once a week.

Thanks again for all your help, highly appreciated!
